During the nineties I made most of my living teaching IT courses at the education centers of HP, Sun, and DEC. Remember them? All taken out by the hobby project of a Finnish CS student, but that’s a story for another day…

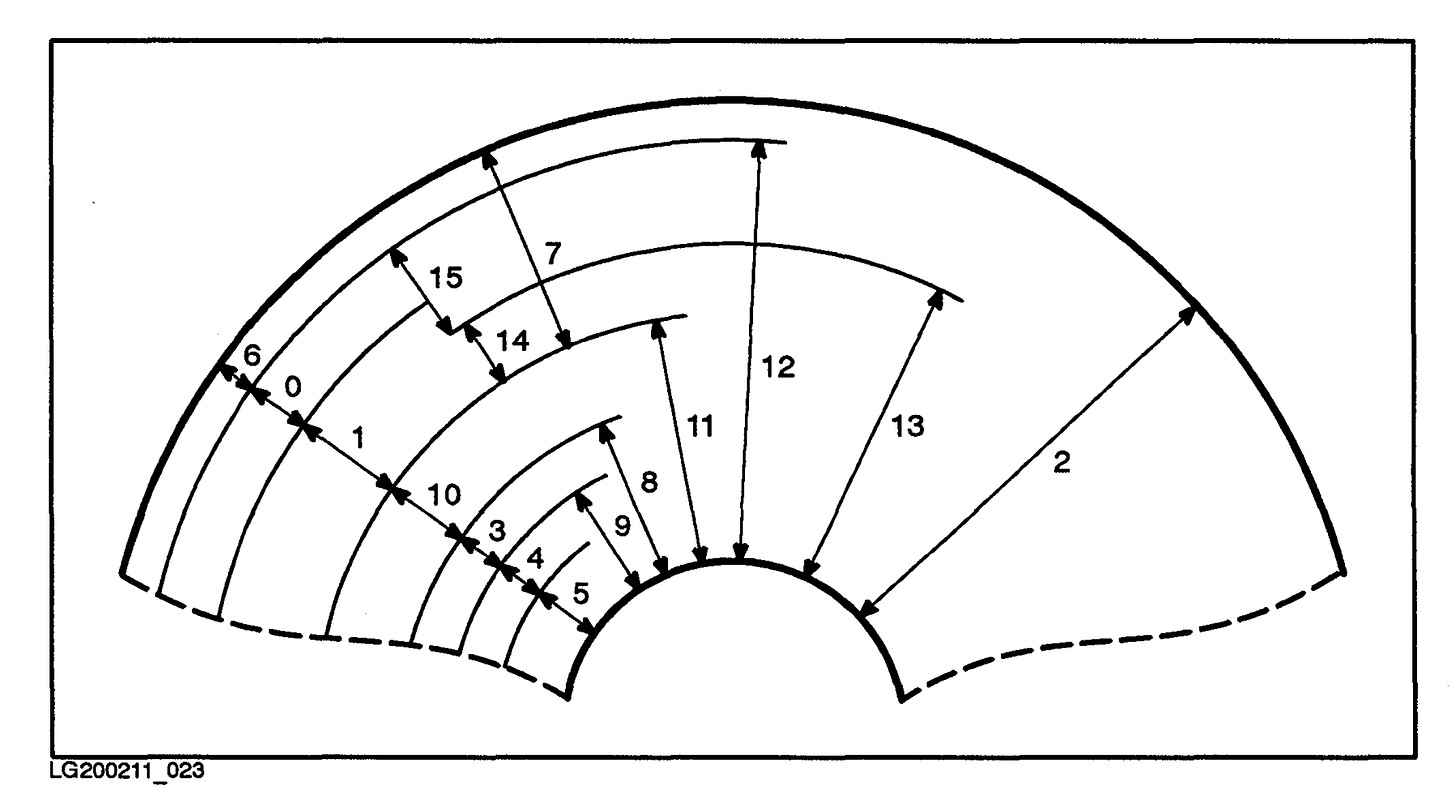

When I started teaching at HP, version 8 of their flagship operating system HP-UX was still going strong. It was a solid but somewhat outdated Unix variant. Its weirdest aspect was its disk partitioning scheme. Every disk was pre-partitioned into 16 sections of different (but predetermined) sizes, each starting at some well-known cylinder on the disk. The sections were laid out in a weird way that contained overlaps (see the image below). For instance, section 2 was always the entire disk, but sections 11 and 7 (together) also described the entire disk, and so did sections 13, 15, and 6.

When you wanted to use a disk, you had to choose the sections to hold swap and the various file systems. Say you wanted a 1 GB file system; you could be straight out of luck because there might only be an 800 MB section and a 1.2 GB section available. And of course you couldn’t move the sections or grow/shrink them, as their sizes and locations were fixed.

To make matters worse, the special files for all the sections were pre-created upon boot, so a single typo could literally destroy your data or crash your system because you fat-fingered a section number that overlapped other sections that already contained a file system or a swap space. Wanted to make a file system on section 7 but accidentally typed an 8? Too bad if you already used section 11 or any other section that overlapped with section 8.
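To make the overlap problem concrete, here is a minimal sketch in Python. The cylinder ranges are invented for illustration (I do not have the real HP-UX 8 section table at hand), and the names `Section`, `LAYOUT`, and `conflicts` are hypothetical. The only facts taken from the story above are that section 2 covers the whole disk, that sections 11 and 7 together cover the whole disk, and that section 8 overlaps section 11.

```python
from typing import NamedTuple


class Section(NamedTuple):
    start: int  # first cylinder (inclusive)
    end: int    # last cylinder (exclusive)


# HYPOTHETICAL layout: the cylinder numbers are made up for illustration.
LAYOUT = {
    2: Section(0, 1000),    # the entire disk
    11: Section(0, 400),    # first part of the disk
    7: Section(400, 1000),  # the rest, so 11 + 7 == entire disk
    8: Section(300, 600),   # assumed to straddle the 11/7 boundary
}


def overlaps(a: Section, b: Section) -> bool:
    """Two half-open cylinder ranges overlap if each starts before the other ends."""
    return a.start < b.end and b.start < a.end


def conflicts(target: int, in_use: set[int]) -> list[int]:
    """Return the in-use sections that share cylinders with the target section."""
    wanted = LAYOUT[target]
    return sorted(n for n in in_use if overlaps(wanted, LAYOUT[n]))


if __name__ == "__main__":
    in_use = {11}
    print(conflicts(7, in_use))  # what you meant to type
    print(conflicts(8, in_use))  # what you actually typed
```

With this made-up layout, asking for section 7 reports no conflicts, while the fat-fingered section 8 reports a clash with section 11. Nothing in the old scheme ran a check like this for you; the special file for section 8 was simply there, ready to be overwritten.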

The potential for mistakes grew significantly when later HP-UX versions swapped the meaning of sections 0 and 2. From then on, section 0 was the entire disk and section 2 was the section sandwiched between sections 6 and 1. Another mistake you could make was to choose your partitions suboptimally and then end up with gaps on your disk that you could not use because none of the preconfigured sections described that gap.

Really, who ever thought this was a good idea? It was a sad state of affairs that made nobody happy.

Then HP-UX 9 came. Among other novelties it contained a new way to group and partition disks dynamically: the Logical Volume Manager (LVM). Suddenly you could make partitions of … wait for it … any size you wanted! You could even make partitions that spanned two disks! Or partitions that were mirrored (after buying an optional software product to enable that functionality). It was amazing and there was much rejoicing.

As one of the instructors in the HP Education Center in the Netherlands, I was tasked with getting ready to teach the “HP-UX 9.0 New Features and Functions” course. One day I was sitting behind the console of a small HP9000 server together with another trainer to figure out how the new features that we were supposed to inform people about actually worked. As HP-UX 9 was hot off the press, we knew pretty much nothing about it yet, so we were looking through the manuals and trying things out. At one point we directed our attention to one of the labs, which asked us to set up some logical volumes in a particular way. As my colleague looked somewhat challenged, I thought about it for a second and then typed in the right commands.

He looked surprised. “How did you know how to do that?”, he asked. I looked at him equally surprised: “I just imagined how I would have implemented it and then moved forward from there.”

He was befuddled because apparently he couldn’t reason from first principles.

A first principle is a basic assumption that cannot be deduced any further. Over two thousand years ago, Aristotle defined a first principle as “the first basis from which a thing is known” (source). Using first principles means that you could invent a whole thing from scratch, just because you know in essence what is going on below all the layers of construction and invention between the thing you are trying to understand and, well, the Higgs boson.

Cue a physics joke: A Higgs boson walks into a Catholic church. “Go away”, the priest shouted, “we want nothing to do with the likes of you here!” The Higgs boson answered: “But how can you have a mass without me?” 🤣

I really consider myself lucky to have come to this field at a time when there was not a lot going on between me and the transistors. The operating systems of the time were typically very thin layers between the programmer and the hardware and programming languages were considered advanced if they supported multi-line if-then-else statements. For instance I only learned about the concept of a “library” that contained code that you could “link in” after a few years of programming home computers in languages like BASIC, assembler, and Forth.

Starting in such a constrained environment means that everything I learned from that point on built forth (pun intended) on an in-depth understanding of what came before it. It also means that I often can make parallels to classic solutions for the same problem, with which comes the ability to create connections between new and old technologies, which in turn creates a better understanding of what is going on, what they are trying to solve, and why this new thing is better (if and when it is, which is not always the case).

For instance, when Java came with its bytecode and virtual machine, the first thing I thought was: Aha, the UCSD p-System in a new jacket! When Xen, VMware, and other virtualization platforms appeared, I could understand what they were doing in terms of IBM’s VM/370 mainframe operating system. When I learned Kubernetes, I thought about it in terms of Borg, which I initially understood in terms of various Unix clustering products I had worked with.

Of course it is impossible to know everything about everything, so I am still regularly surprised and then need to dive deep in order to figure out what is going on. For instance, when I started using the Unix shell, I had never ever seen anything like it and had to spend considerable time thinking, reading, and trying things out before I understood the redirection of I/O, pipes, and filters. However, I could then backtrack to what I knew about MS-DOS, and retroactively I understood what Microsoft had tried to do in their command interpreter (COMMAND.COM) and whence that came.

Sometimes people make it really hard to reason from lower level principles. For instance, the cloud providers seem to do their best to explain their networking products in terms that make it almost impossible to figure out what is happening in terms of IP packets flying around. I had the most difficult time trying to figure out what AWS PrivateLink actually did until somebody told me: “Think of it as NAT-as-a-service.” This statement hit me like a slice of lemon wrapped around a gold brick. Suddenly the entirety of what was happening made complete sense to me; that sentence was like finding the key that unlocked the lower layers, which then allowed me to piece the puzzle together.

Reasoning from first principles is an expensive attitude. I often spend a lot of time trying to figure out how something actually works, even though there is no direct benefit to whatever I am trying to achieve at that moment. However, all the knowledge thus acquired eventually comes in useful somewhere else, often significantly speeding up whatever I need to do then.

I find that the biggest advantage of being able to reason from first principles is in the noble art of debugging. The question at the heart of every debugging problem is: “Why is this thing that I expect to work not working?” The answer is inevitably that your expectations are wrong because you either don’t understand how things actually work or you assume things to be in a state that they are not in. In both of these cases you need to understand exactly how things hang together to figure out the problem (either in your understanding or in the state), and that more often than not requires thinking from the first (or at least the (n-m)th) principle, with n being the layer where you are at and m the number of layers below n where the problem has its root cause.

As I wrote above, I consider myself lucky to have grown up in a world where reasoning from first principles was taught naturally because we operated at layer 2, or maybe 3, and there really was not a lot going on between that layer and the hardware. Today’s entrants in the field grow up in sandboxes wrapped in sandboxes and really have a large uphill battle to fight if they want to understand the whole tech stack down to the bits.

I just want to say, sometimes it rocks to be old 🙂 (we also had better music in our childhood).

Here's a 2 min audio version of "It's the principle of the thing" from Wednesday Wisdom converted using recast app.

https://app.letsrecast.ai/r/4952c132-e693-457b-87b0-497af76ccb3c